Source : https://dzone.com/articles/5-open-source-machine-learning-frameworks-and-tool

The list below describes five of the most popular open-source machine learning frameworks, what they offer, and what use cases they can best be applied to.

Practical machine learning development has advanced at a remarkable pace. This is reflected by not only a rise in actual products based on, or offering, machine learning capabilities but also a rise in new development frameworks and methodologies, most of which are backed by open-source projects.

In fact, developers and researchers beginning a new project can be easily overwhelmed by the choice of frameworks offered out there. These new tools vary considerably — and striking a balance between keeping up with new trends and ensuring project stability and reliability can be hard.

The list below describes five of the most popular open-source machine learning frameworks, what they offer, and what use cases they can best be applied to.

1. TensorFlow

Made public and open-sourced two years ago, TensorFlow is Google's own internal framework for deep learning (artificial neural networks). It allows you to build any kind of neural network (and other computational models) by stacking the typical mathematical operations for NNs in a "computational graph."

The magic happens when the model is trained, and TensorFlow knows how to back-propagate errors through the computational graph, thus learning the correct parameters. TensorFlow is general enough to be used for building any type of network — from text to image classifiers, to more advanced models like generative adversarial neural networks (GANs), and even allows other frameworks to be built on top of it (see Keras and Edward down the list).

True, we could have selected other prominent deep learning frameworks that are equally as effective as TensorFlow-like Theano, Torch, or Caffe — but TensorFlow has risen in popularity so quickly that it's now arguably the de facto industry standard for deep learning (and yes, being developed and used by Google itself does lend it extra kudos.) It also has the most active and diverse ecosystem of developers and tools of all the DL frameworks.

Another consideration is that since deep learning frameworks are evolving on a daily basis and are often buggy, it's important to know that your Stack Overflow search will yield results-and almost every bug and difficulty in TensorFlow is "Stack Overflow solvable."

The main pitfall many users cite against TensorFlow is its intrinsic difficulty of use, due in part to the fact that the framework is so customizable and "down to the metal" of every little detail, almost every computation in the neural network you're building needs to be explicitly programmed into the model. Some may view this as a benefit, but unless you're very familiar with every implementation detail of the model you're trying to build (differences between activation functions, matrix multiplication dimensionality, etc.), TensorFlow may become burdensome. Users who wish to work with more high-level models will enjoy Keras, which is next on our list.

2. Keras

Keras is a high-level interface for deep learning frameworks like Google's TensorFlow, Microsoft's CNTK, Amazon's MXNet, and more. Initially built from scratch by François Chollet in 2015, Keras quickly became the second-fastest growing deep learning framework after TensorFlow. This is easily explained by its declared purpose: to make drafting new DL models as easy as writing new methods in Python. This is why, for anyone who has struggled with building models using TensorFlow at all, Keras is nothing short of revolutionary-it allows you to easily create common types of neuron layers, select metrics, error function, and optimization method, and to train the model quickly and easily.

Its main power lies in its modularity: Almost every neural building block is available in the library (which is regularly updated with new ones), and they can all be easily composed on top of one another, to create more customized and elaborate models.

Some cool recent projects that use Keras are Object Detection and Segmentation by Matterport, and this cool grayscale image colorization model. Uber has also recently revealed in a post that their distributional deep learning framework, Horovod, also supports Keras for fast prototyping.

3. SciKit-Learn

With the recent explosion of deep learning, one may get the impression that other, more "classic" machine learning models are no longer in use. This is far from true — in fact, many common machine learning tasks can be solved using traditional models that were industry standard before the deep learning boom-and with much greater ease. While deep learning models are great at capturing patterns, it's often hard to explain what and how they have learned, an important requirement in many applications (see Lime, below, for model explanations). Plus, they're often very computationally expensive to train and deploy. Also, standard problems like clustering, dimensionality reduction, and feature selection can often be solved more easily using traditional models.

SciKit-learn is an academia-backed framework that has just celebrated its tenth anniversary. It contains almost every machine learning model imaginable — from linear and logistic regressors to SVM classifiers and random forests — and it has a huge toolbox of preprocessing methods like dimensionality reduction, text transformations, and many more.

In our humble opinion, SciKit-learn is truly one of the greatest feats of the Python community, if only for its documentation — some of the best docs we've seen for any Python package. In fact, its user guide is so good that you could use it as a textbook for data science and machine learning. Any start-up about to jump into deep learning waters should first consider opting for a SciKit model that may provide similar performance, while also saving development time.

4. Edward

Edward is one of the most promising and intriguing projects seen in the community in a while.

Created by Dustin Tran, a Ph.D. student at Columbia University and a researcher at Google, alongside a group of AI researchers and contributors, Edward is built on top of TensorFlow and fuses three fields: Bayesian statistics and machine learning, deep learning, and probabilistic programming.

It allows users to construct probabilistic graphical models (PGM). It can be used to build Bayesian neural networks, along with almost every model that can be represented as a graph and uses probabilistic representations. Its uses are still limited more to hardcore AI models than to real-world production, but since PGMs are proving increasingly useful in AI research, we can assume that practical uses will be found for these models in the near future.

Recently, Edward reached another impressive milestone, announcing that it will be officially integrated into the TensorFlow code base.

5. Lime

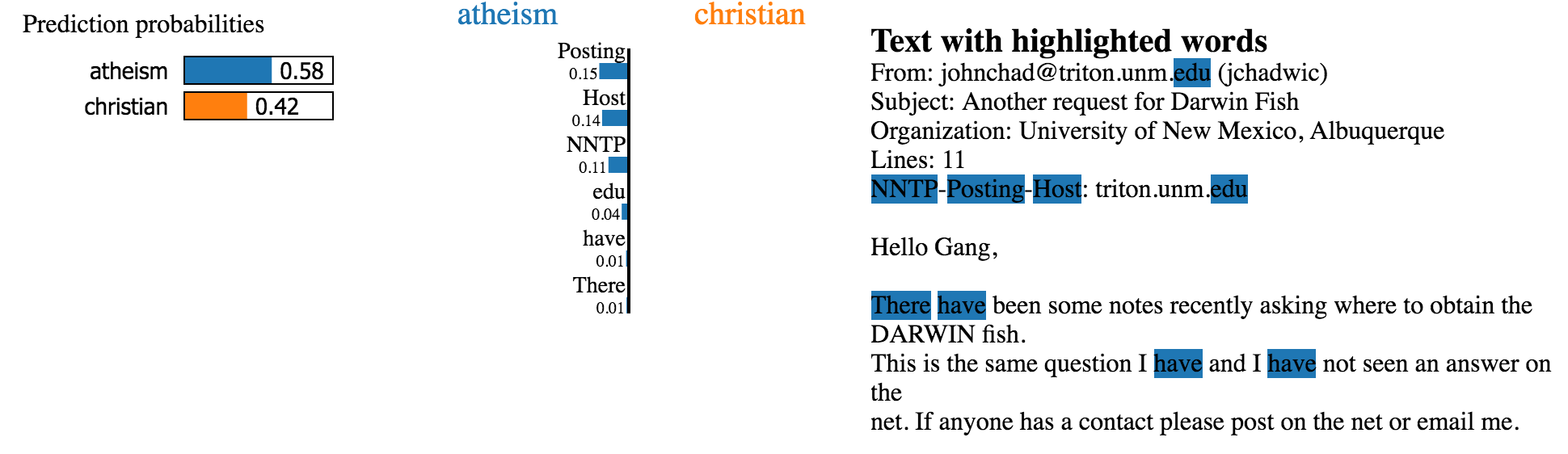

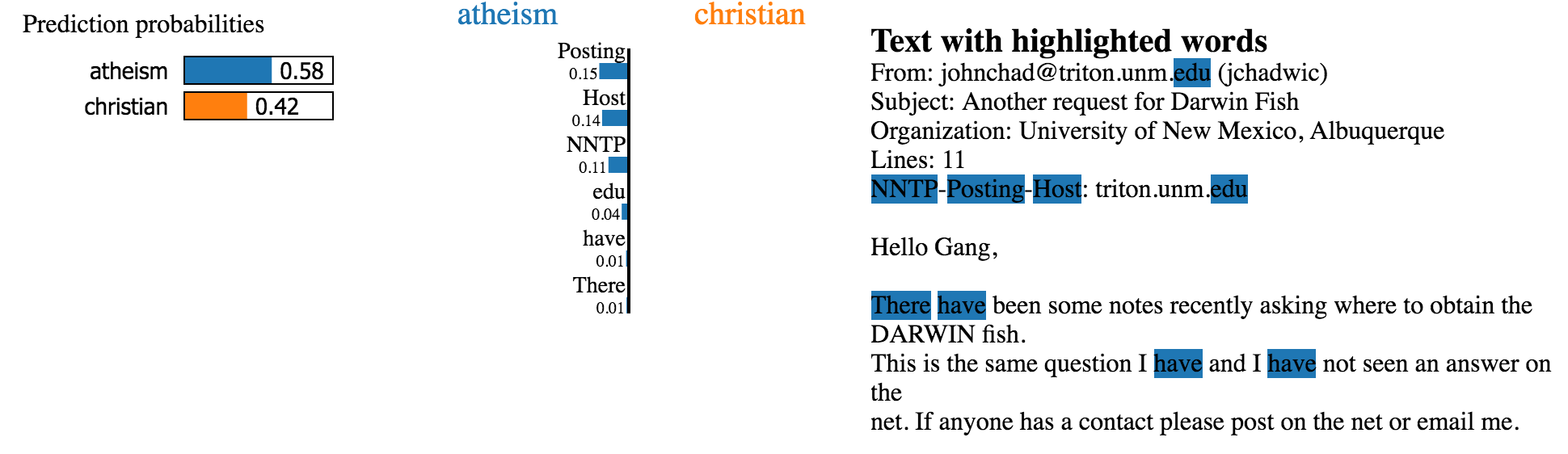

One of the biggest challenges with machine learning is explaining what your model has learned —in other words, debugging its internal representations. Say you've built a text classifier that works pretty well, but fails embarrassingly for some sentences. For example, you'd like to know which words in the sentence "Apple iPad Pro with red case" caused the model to classify the device as a surfboard instead of a tablet — finding out that the word "red" is more typical to surfboards than to smartphones, leading the model to a mistake. This can be done by inspecting the model's internal weights for each word in its vocabulary, a process that may become cumbersome for more complicated models with a lot of features and weights.

Lime is an easy-to-use Python package that does this for you in a more intelligent way. Taking a constructed model as input, it runs a second "meta" approximator of the learned model, which approximates the behavior of the model for different inputs. The output is an explainer for the model, identifying which parts of any input helped the model reach a decision and which didn't.

It then displays the results in a readable and interpretable way — for example, an explanation for a text classifier would look like this, highlighting the words that helped the model reach a decision, and their respective probabilities:

Image taken from project’s GitHub page

Lime supports — learn models out of the box, as it does for any classifier model that takes in raw text or an array, and outputs a probability for each class. It can also explain image classification models. The following screenshot explains a classifier that learned to distinguish between cats and dogs. In the screenshot, Lime explains which areas in the image were used by the model for classifying the image as "cat" (the green zone), and which areas had a negative weight, causing it to lean toward a "dog" classification.

Image taken from project’s GitHub page

Endnotes

We have listed here three major frameworks and two promising tools for machine learning researchers and engineers to consider when approaching a project, either by building it from scratch or trying to improve it.

As the practical machine learning development world advances, we hope to see more mature frameworks and tools. In a future post, we will list even more advanced and neat tools specially created for machine learning engineers.